DCI – The Digital Commons Initiative

DCI began as an initiative kick-started by an ADHO grant and subsequently developed into a TransCoop project funded by the Alexander-von-Humboldt Stiftung. The two project leaders of the DCI (Prof. Dr. Jan Christoph Meister, University of Hamburg, and Prof. Dr. Stéfan Sinclair, McGill University, Montréal/Canada) have both been engaged in Digital Humanities research for over a decade. In addition to their research in German and French language and literature, both also head teams engaged in DH tool design and development projects. These include Voyeur[1] and TAPOR[2] (Stéfan Sinclair), CATMA[3] and AGORA[4] as well as heureCLÉA and eFoto (Jan Christoph Meister).

_______________________________________________________________________

Context

Over the past decade the humanities have irreversibly “gone digital”: electronic archives, digital repositories, web resources and a host of platforms and collaborative environments provide access to vast amounts of data that re-present the humanities’ traditional objects of study – texts, images, cultural artifacts, etc. – in digital format, while ‘born-digital’ material originating from electronic media further expands the scope on a daily basis.

The use of digital resources has thus become routine practice across the humanities disciplines. This has also changed our perception of Digital Humanities (DH). Considered an area of experimental research up until the late 1990s, DH now contributes many methods that affect traditional humanities disciplines in a number of tangible ways. Most prominent among these are

- the digitization and distribution of scientific object material through electronic media;

- the development of specialized digital research tools for humanists;

- the introduction of empirically based computational methods into the hermeneutic disciplines.

Despite the increasing relevance of visualization techniques, one of the dominant practices in the humanities’ day-to-day business remains the analysis, exploration and interpretation of primary and/or secondary textual data. Here DH has enabled us to “go digital” by way of important developments that range from abstract data models to digitization standards, from document type definitions to abstract workflow models – and, last but not least, through the development of software applications and systems that respond to our need for digital tools tailored to the specific requirements of one of the humanities’ longest-standing research problems: “What does this text mean?”

Project outline: the Digital Commons Initiative

DH software tools cannot answer this question for us – but they can certainly help us to find answers to it. The first goal of the Digital Commons Initiative (DCI) is to identify such applications and methods, and more particularly to specify and define the key criteria for tools that we categorize as “common”. Common tools and methods

- support literary as well as linguistic text analysis, and

- can in principle handle texts in any natural language.
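As a rough illustration of the second criterion, a basic word-frequency count can be written without any language-specific resources. The sketch below is not taken from Voyeur, CATMA or any other DCI tool; the function name `term_frequencies` is hypothetical, and it assumes that words are separated by spaces or punctuation, so it would not segment scripts such as Chinese or Japanese.

```python
import re
import unicodedata
from collections import Counter

# Assumption: a token is a run of Unicode word characters (letters, digits,
# underscore). Scripts written without word separators are not segmented.
TOKEN = re.compile(r"\w+")


def term_frequencies(text: str) -> Counter:
    """Count case-folded word forms without language-specific resources."""
    # NFC normalization makes composed and decomposed accents compare equal.
    normalized = unicodedata.normalize("NFC", text)
    return Counter(token.casefold() for token in TOKEN.findall(normalized))


# The same function is applied to a German and a French sample sentence.
german = term_frequencies("Der Text und der Leser")
french = term_frequencies("Le texte et le lecteur")
print(german.most_common(1))
print(french.most_common(1))
```

Case folding and Unicode normalization are used here instead of a language-specific stop list or stemmer, which is what keeps the sketch independent of any one language.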

The second goal of the DCI is to investigate how such “commonality” can be implemented in concrete systems development projects. In a first step we will combine two existing text-analysis applications with different functionalities into a new “common” tool. This tool will then be integrated into an existing web platform and tested for its usability and robustness. By producing this prototype, the DCI project aims to formulate best-practice recommendations for the future development of “common” DH tools.

The DCI brings together

- German and Canadian Digital Humanities (DH) specialists with a disciplinary affiliation to Linguistics, Literary Studies and/or Media Studies, and with individual research interests in German, English or French language, literature and cultural studies projects, and

- DH systems designers and developers with practical experience in the building of textual analysis tools.

Examples

[1] Voyeur is a web-based text-analysis environment that has been designed to be integrated into remote sites. For details see www.voyeurtools.org

[2] TAPOR (Textual Analysis Portal) represented a first attempt to make a suite of online analysis tools available via a web portal. This approach has meanwhile been superseded by the ‘plug-in’ model used in Voyeur.

[3] CATMA (Computer Assisted Textual Markup and Analysis) is a stand-alone tool developed at Hamburg University which integrates TEI/XML-based markup and analysis functionality. In contrast to Voyeur, CATMA’s emphasis lies on user-controlled iterative post-processing (markup, and analyses that combine ‘raw data’ with markup data). For information on CATMA see www.catma.de

[4] For information on AGORA see www.agora.uni-hamburg.de. The platform, which currently has an active user base of approx. 15,000 individuals, supports all humanities disciplines at Hamburg University.

Visualization of text data in the Humanities

A Digital Commons Initiative workshop funded by the Alexander von Humboldt Foundation

The workshop will be documented on this blog.

Participants can tweet about it using the hashtag #Textviz.

11-14-2013 10:10

Starting off the workshop in the beautiful “Senatssitzungssaal” at the University of Hamburg.

10:20

After a quick round of introductions, Jan Christoph Meister presents his view on visualization in the Humanities, addressing the big questions for this workshop:

- What are the goals for a visualization?

- How can it help in understanding and evaluating the data?

- How can it be used later on by a user?

10:40

Chris Culy gives a talk about how to choose a visualization for a certain task. The talk will be recorded and published online later. The slides can be found here.

Starting from an example analysis of the Browning Letters, Chris Culy explains how to get from the analysis of a corpus to a suitable visualization of the data.

12:10

Presentation of use cases.

Evelyn Gius, Frederike Lagoni, Lena Schüch and Mareike Höckendorff present their PhD theses and explain what they would ask of a visualization.

Evelyn Gius presents her PhD thesis on the narration of work conflicts.

Frederike Lagoni presents her PhD thesis, entitled “narrative introspection in fictional and factual narration – a sign of discrepancy?”.

Lena Schüch presents her PhD thesis on the narrativity of English and German song lyrics.

Mareike Höckendorff presents her PhD thesis on the literature of Hamburg.

15:00

After a typical German lunch in the campus canteen, the group meets again.

Based on the tasks of a visualization that Chris Culy presented in his talk, a general discussion begins.

The results were collected in the table below.

17:15

After laying the groundwork for the discussions of the coming days, the meeting is adjourned for the day.

10:00

Second day of the workshop.

Chris Culy gives a presentation of some techniques and tools relevant for the use cases.

The slides can be found here.

Several examples of visualizations and their specific advantages and disadvantages are discussed.

11:30

The discussion turns to the question of the user:

- How can you get people to use visualizations?

- How can you help an inexperienced user to choose the right visualization for his/her data?

12:15

Eyal Shejter presents his topic modeling tool.

A discussion starts about the use of topic modeling:

- In which types of text can it be useful?

- For which kinds of research can it be useful?

- How do you need to adjust the parameters of the algorithm depending on the text type and the research?

14:20

Stéfan Sinclair presents a few projects, such as the “mandala browser”.

15:30

Two groups are formed: one works on a general list of visualizations for certain tasks, whereas the other works on the specific project of Evelyn Gius’ PhD thesis, trying to design a matching tool to visualize her data.

17:15

Both groups present their results to the whole group.

10:00

The group comes together for the final day of the workshop, this time on the 12th floor of the “Philosophenturm” with a splendid view over the city.

Based on the questions that the second group, working on Evelyn’s PhD thesis, encountered, a general discussion arises about how visualizations should adapt to the specific user group of researchers in the Humanities.

12:45

The group tries to collect a few conventions for visualizations of textual data.

14:00

After the lunch break, the future of the project is discussed.

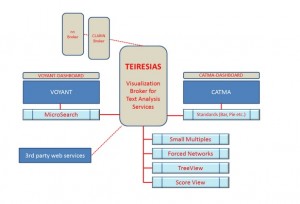

A list of desired implementations for CATMA is discussed:

- MicroSearch

- Small Multiples

- Force-Directed Network

- Tree View

- Score View

- Some standard visualizations (bar graphs, pie graphs, etc.)

- A Voyant connection

- A visualization dashboard

15:00

Small groups are formed which will try to create prototypes for the discussed implementations.

16:30

Shortly before the workshop comes to an end, the results of the group work are presented.

The planned architecture for the CATMA implementations.

Andrew worked on a tree representation of the tagset used in Evelyn’s PhD thesis.
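To give an idea of what a tree representation of a tagset involves, the following minimal sketch renders a hierarchical tagset as an indented outline. It is not Andrew's prototype: the tag names, the `TAGSET` mapping and the `render_tree` function are hypothetical placeholders chosen for illustration, not the tagset from Evelyn's thesis or any part of CATMA.

```python
from typing import Dict, List

# Hypothetical tagset: each tag maps to the list of its child tags.
TAGSET: Dict[str, List[str]] = {
    "narrative": ["event", "character"],
    "event": ["conflict", "resolution"],
    "character": [],
    "conflict": [],
    "resolution": [],
}


def render_tree(tagset: Dict[str, List[str]], root: str, depth: int = 0) -> List[str]:
    """Return the tag hierarchy below `root` as indented lines (depth-first)."""
    lines = ["  " * depth + root]
    for child in tagset.get(root, []):
        lines.extend(render_tree(tagset, child, depth + 1))
    return lines


print("\n".join(render_tree(TAGSET, "narrative")))
```

The same depth-first traversal could feed a graphical tree view; the text outline only shows the structure such a view would have to convey.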

Stéfan worked on the microview.

Jonathan and Matt worked on the Voronoi map.

17:00

Jan Christoph Meister closes the workshop by thanking all the participants for three very interesting and productive days.